A warm welcome to the Deeper newsletter, dear reader!

You’re reading this because, just like us, you’re tired of the non-stop tech and startup news cycle, where there always seems to be something new and trendy to keep up with in Southeast Asia.

What you want to know about is the important stuff that matters—period.

And that’s what we’re bringing to you. We’ll dive deep into a trending topic in Southeast Asian tech and its impact on society (i.e., you and me).


I have a confession: I never saw The Matrix trilogy until this year.

Last month, I mentioned AI’s energy problem to my colleagues at With Content, and one of them said it reminded him of The Matrix. I promptly spent the weekend binge-watching the films.

It turns out the scriptwriters were somewhat prescient. More than 20 years have passed since they imagined a world where machines needed so much energy that they resorted to harnessing humans’ bioelectricity.

The first part is true today.

TL;DR

- The Matrix didn’t exaggerate: AI is super power-hungry

- Learning models are getting bloated, and hungrier than ever

- Why Asians should care: The problem is closer to home than you think

- Multiple solutions—can we stop this growing problem in its tracks?

- We’ve already embraced AI, so let’s focus on making it better

The Matrix didn’t exaggerate: AI is super power-hungry

There’s much talk about how AI can solve energy problems:

- Intelligent, predictive systems can automatically adjust power generation to match consumers’ needs

- Smart home systems can study residents’ energy usage habits and learn when to reduce energy usage

- Learning algorithms are being used to reduce the energy needed to cool Google’s data centers

- Deep learning systems can predict failures of offshore wind farms, optimize maintenance, and cut downtime

…and so much more.

But the conversation is shifting to acknowledge how much energy AI systems themselves consume.

Hint: it’s a lot.

Remember AlphaGo, the first computer program to beat a professional player at the board game Go? Its successor, AlphaGo Zero, used less computing power and training time. Still, it’s estimated to have used the same amount of energy needed to keep 12,760 human brains running continuously.

There’s also the algorithm that learned to manipulate a Rubik’s Cube with a robotic hand. To achieve this, researchers at OpenAI in San Francisco ran intensive calculations for several months using more than 1,000 desktop computers and 12 machines with specialized graphics chips, according to a Wired report.

The report also says the energy used was roughly equivalent to “the output of three nuclear power plants for an hour,” based on estimates by Determined AI, a machine-learning startup.

And what about GPT-3 (Generative Pre-trained Transformer 3), the text generator that fooled some readers into thinking its blog posts were human-written? The energy it requires could power 126 Danish homes for a year, while a single training session for the model produces the carbon footprint of a 700,000-kilometer road trip.

But these examples are of extraordinary feats. How does the average AI model fare?

It’s not exactly conservative, either.

Consider the findings of studies in recent years:

- Training an off-the-shelf AI language-processing system produces emissions equivalent to flying one person from San Francisco to New York, and back. That’s 1,400 pounds of emissions.

- The training phase alone for an average deep-learning model generates around 78,000 pounds of carbon dioxide, more than the annual carbon footprint of two average American adults. But that’s based on circa-2019 models, which were less complex and used less training data than today’s AI.

- Machine-learning algorithms live in data centers, which accounted for 1% of global electricity use as of 2019. That share is roughly the same as in 2010, which suggests data centers are becoming more energy-efficient. However, other researchers believe the figure is closer to 3% and could balloon to 10% by 2025.

So there may be reason to believe we’re headed for a dystopian future after all. Fortunately, there’s still time to escape this swelling spiral of energy usage. Run, Neo, run.

Learning models are getting bloated, and hungrier than ever

In pursuit of accuracy, AI models are being built with ever more parameters and trained on ever larger data sets, so they need more time and energy to process all this information. (Parameters are the internal values that a machine learning model learns on its own from the training data. Models need them in order to make predictions.)

In natural language processing, parameters encode the patterns a model has picked up about how words, punctuation, and grammar fit together, depending on the model’s task. More parameters typically means more sophisticated language learning and generation. GPT-3, the language generator, uses 175 billion parameters; its predecessor, GPT-2, had 1.5 billion.

While this means GPT-3 can create a wider variety of texts, it’s still prone to basic errors, as the co-founder of its creator, OpenAI, readily admits:

The GPT-3 hype is way too much. It’s impressive (thanks for the nice compliments!) but it still has serious weaknesses and sometimes makes very silly mistakes. AI is going to change the world, but GPT-3 is just a very early glimpse. We have a lot still to figure out.

— Sam Altman (@sama) July 19, 2020

This reflects the reality that the growth of models isn’t proportional to the increase in accuracy. In turn, this means that many models today are inefficient—they require so much to produce so little.

Case in point: for an algorithm to learn whether or not an image shows a cat, it first has to process millions of cat images.

Another: an improved version of one computer vision model used 35% more computational resources to improve accuracy over its predecessor by a mere 0.5%.

Accenture found similar diminishing returns in an experiment. Its model used 964 joules of energy to achieve a training accuracy of 96.17%. But get this—it used at least 15 times more energy to achieve only 2.5% more accuracy!

This may not seem like much. A typical 1,000-watt microwave oven, for example, uses about 60,000 joules in one minute of operation. But as Accenture points out: “Remember what we said about machine learning being ubiquitous. If we can save even small amounts of energy each time machine learning models are trained, we could have a significant impact on energy use and sustainability.”
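To make the diminishing returns concrete, here is a rough back-of-the-envelope calculation using only the Accenture figures quoted above. It rests on two assumptions of ours: that "15 times more energy" means 15 times the baseline total (a lower bound, since the text says "at least"), and that the 2.5% gain is measured in percentage points.

```python
# Figures quoted in the text above (Accenture experiment).
BASE_ENERGY_J = 964        # joules used to reach the baseline accuracy
BASE_ACCURACY_PCT = 96.17  # baseline training accuracy, in percent
ENERGY_MULTIPLIER = 15     # "at least 15 times more energy" (lower bound)
ACCURACY_GAIN_PTS = 2.5    # assumption: the 2.5% gain is in percentage points


def joules_per_point(energy_j: float, accuracy_points: float) -> float:
    """Energy spent per percentage point of accuracy."""
    return energy_j / accuracy_points


# Assumption: "15 times more" is read as 15x the baseline total.
total_energy_j = BASE_ENERGY_J * ENERGY_MULTIPLIER
extra_energy_j = total_energy_j - BASE_ENERGY_J

baseline_cost = joules_per_point(BASE_ENERGY_J, BASE_ACCURACY_PCT)
marginal_cost = joules_per_point(extra_energy_j, ACCURACY_GAIN_PTS)
cost_ratio = marginal_cost / baseline_cost

print(f"Total energy for the more accurate run: {total_energy_j:,} J")
print(f"Baseline cost: {baseline_cost:.1f} J per accuracy point")
print(f"Marginal cost: {marginal_cost:.1f} J per accuracy point")
print(f"Each extra point costs about {cost_ratio:.0f}x the baseline rate")
```

Under these assumptions, the last few points of accuracy cost several hundred times more energy per point than the first 96 did, which is the pattern the experiment describes.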

Part of the problem, writes venture capitalist Rob Toews, is that even a fraction of a percent’s increase in accuracy is enough for researchers to gain acclaim. This encourages them to try and build models that set new accuracy records.

Why Asians should care: The problem is closer to home than you think

Asia is becoming a hotbed for AI activity. Japan, South Korea, and China are among the world’s top filers of AI patents. India has convinced many global companies to set up AI research centers in the country, and grew its AI workforce from 40,000 in 2018 to 72,000 in 2019. Singapore, Beijing, Tokyo, and Seoul are also building AI hubs.

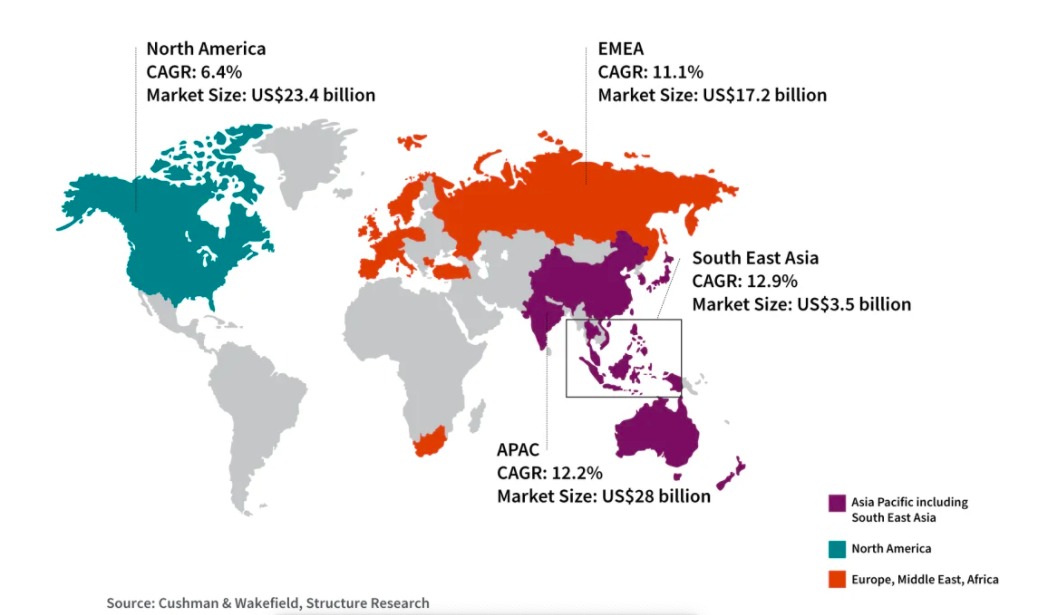

AI-related technology sectors are also booming in the region. Asia accounted for 65% of global industrial robot usage in 2017, according to the International Federation of Robotics. South Korea, Singapore, Thailand, China, and Taiwan were found to lead the world in robotics adoption.

Meanwhile, Asia-Pacific has become the fastest-growing cloud computing market in the world. From 2017 to 2020, Alibaba, Amazon, Google, Microsoft, and Tencent increased their data center footprint in the region by almost 70%.

Asia-Pacific is also predicted to lead the world in 5G adoption by 2024, and is seen as a global leader in Internet-of-Things (IoT) adoption.

All this means that the problem of AI’s energy consumption is closer to home than you think. Couple that with the fact that Southeast Asia’s power requirements are soaring (the region is one of the fastest-growing in the world in terms of energy demand), and it’s obvious that we face an impending dilemma.

For one, renewable energy meets only 15% of the region’s electricity demand. Most of the power comes from coal, leading to ballooning carbon dioxide emissions.

This, in turn, leads to other problems, such as worsening air pollution. The World Health Organization reports that most countries in Southeast Asia have especially high levels of air pollution, and that this is the main culprit for respiratory disease in children across the region. Poor air quality, both indoors and outdoors, is also projected to cause 650,000 premature deaths a year by 2040, up from 450,000 in 2018.

And as demand begins to outpace production, the region is relying on more fossil fuel imports, prompting concerns about energy security.

Of course, this energy problem had been brewing long before AI and related technologies began flourishing here. Let’s be clear: AI is just a tiny part of the energy problem right now. And Southeast Asian countries, at least, are coming together to ensure the security, affordability, and sustainability of energy in the long term.

But as digital transformation is dominating conversations in governments and businesses, it’s important to shed light on how relevant technologies will affect us in the near and long term. After all, these technologies are advancing rapidly, and the pandemic has only accelerated their adoption.

We’ve entered a phase where AI, robotics, and IoT are no longer visions, but part of real-life, even everyday applications. We’re seeing machine learning penetrating various industries—healthcare, finance, manufacturing, and more. Anyone with a new smartphone has AI in their pocket, built into their camera apps.

As a result, AI has entered mainstream vocabulary and is seen as an inevitable component of the future. As one VC told me, every startup now has an AI component, or will soon have one.

The good news is that the industry is waking up to the energy problem, too. Industry players are acknowledging the danger and unsustainability of running businesses on AI models at their current pace of energy consumption. As one headline so aptly put it, they’re recognizing that “AI can do great things—if it doesn’t burn the planet.”

Multiple solutions—can we stop this growing problem in its tracks?

Researchers and industry players are taking several paths to achieve more energy-efficient AI.

At the hardware level, tech companies are developing ultra-low-power AI processors. Last year, California-based AI company Eta Compute launched ECM3532, an AI chip for edge computing that uses as little as 100 microwatts to perform tasks like audio and image processing.

A microwatt is equal to one-millionth of a watt; for comparison, energy-saving light bulbs use 7 to 15 watts, while typical edge AI processors use 1 to 10 watts.
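To get a feel for the gap, the comparison above can be worked out in a few lines. This is only a sketch using the figures quoted in the text; it compares against the chip's best case of 100 microwatts, so real-world ratios would likely be smaller.

```python
MICROWATTS_PER_WATT = 1_000_000

ETA_CHIP_MICROWATTS = 100  # "as little as 100 microwatts" (best case)

# Power ranges quoted in the text, in watts (low, high).
DEVICES_WATTS = {
    "typical edge AI processor": (1, 10),
    "energy-saving light bulb": (7, 15),
}


def times_the_chip(watts: float, chip_microwatts: float = ETA_CHIP_MICROWATTS) -> float:
    """How many times more power a device draws than the chip's best case."""
    return watts * MICROWATTS_PER_WATT / chip_microwatts


for name, (low, high) in DEVICES_WATTS.items():
    print(
        f"{name}: {times_the_chip(low):,.0f}x to "
        f"{times_the_chip(high):,.0f}x the chip's power draw"
    )
```

In other words, a typical edge AI processor draws somewhere between ten thousand and a hundred thousand times the power of the chip at its most frugal.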

Meanwhile, companies in AI and related technologies are pledging to offset their carbon footprint. Amazon, Google, and Microsoft have promised to decarbonize their data centers in Asia. Microsoft, in particular, announced it will use 100% renewable energy in all of its data centers by 2025.

But this comes with challenges unique to the region. The tropical climate in Southeast Asia translates to greater cooling needs. In fact, cooling needs currently make up 35% to 40% of energy demand in Southeast Asia-based data centers.

While liquid cooling improves energy efficiency, it also leads to another set of problems related to water consumption and sustainability. The key is to rethink data center design. Facebook, for example, says it designs its data centers to be 80% more water-efficient than average.

There’s also an increasing call for transparency and measurement. Researchers should disclose the amount of energy used in model development and training whenever they publish results of performance and accuracy. How to measure the energy consumption of algorithms is another question, but it’s a debate that’s producing practical suggestions.
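One simple way to picture such a disclosure is to report energy and estimated emissions right next to accuracy. The sketch below is purely illustrative: the `TrainingRun` class, its fields, and every number in it are hypothetical placeholders, and it assumes the average power draw of the hardware and the carbon intensity of the local grid are already known.

```python
from dataclasses import dataclass


@dataclass
class TrainingRun:
    """Hypothetical record of one training run; all fields are illustrative."""

    name: str
    avg_power_watts: float  # average measured power draw of the hardware
    hours: float            # wall-clock training time
    accuracy_pct: float     # accuracy reported for the resulting model

    def energy_kwh(self) -> float:
        """Energy = average power x time, converted from watt-hours to kWh."""
        return self.avg_power_watts * self.hours / 1000

    def emissions_kg(self, grid_kg_co2_per_kwh: float) -> float:
        """Estimated CO2 in kg, given the grid's carbon intensity."""
        return self.energy_kwh() * grid_kg_co2_per_kwh

    def summary(self, grid_kg_co2_per_kwh: float) -> str:
        """One-line report that puts accuracy and energy side by side."""
        return (
            f"{self.name}: {self.accuracy_pct:.2f}% accuracy, "
            f"{self.energy_kwh():.2f} kWh, "
            f"{self.emissions_kg(grid_kg_co2_per_kwh):.2f} kg CO2"
        )


# Placeholder numbers, not measurements from any real model or grid.
run = TrainingRun("example-model", avg_power_watts=300.0, hours=10.0, accuracy_pct=90.0)
print(run.summary(grid_kg_co2_per_kwh=0.5))
```

The hard part in practice is getting trustworthy inputs, such as measured power draw and a realistic grid figure, which is exactly where the debate over measurement comes in.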

Still, all of these measures will have less impact if learning models continue to be inefficient. Researchers need to develop ways to train AI models more efficiently, starting with reducing the number of unnecessary experiments.

Experts point to nature as an inspiration for efficiency, with the human brain being the best example.

Consider this: it took a data set of 3.3 billion words, read not once but 40 times, to train a language network called BERT (Bidirectional Encoder Representations from Transformers). By comparison, children hear 45 million words by age five, which is 3,000 times fewer, points out Kate Saenko, an associate professor of computer science at Boston University.
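The "3,000 times" figure follows from counting every pass BERT made over its data set. A quick check with the numbers quoted above, assuming each of the 40 passes counts as reading the full 3.3 billion words again:

```python
BERT_DATASET_WORDS = 3_300_000_000   # size of the training data set
BERT_PASSES = 40                     # times the data set was read
CHILD_WORDS_BY_AGE_FIVE = 45_000_000

# Total words BERT processed across all passes.
bert_words_read = BERT_DATASET_WORDS * BERT_PASSES

# How many times more words BERT read than a five-year-old has heard.
ratio = bert_words_read / CHILD_WORDS_BY_AGE_FIVE

print(f"Words read by BERT: {bert_words_read:,}")
print(f"Words heard by a child by age five: {CHILD_WORDS_BY_AGE_FIVE:,}")
print(f"Ratio: about {ratio:,.0f} to 1")
```

That works out to 132 billion words against 45 million, a ratio just under 3,000 to 1.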

Proving nature’s point, an international research team recently took inspiration from the brains of tiny animals to create a simple neural network. They developed an AI system that could control a vehicle using a few artificial neurons, maximizing the model’s efficiency. While typical deep-learning models used for autonomous driving require millions of parameters, the team’s system uses only 75,000 trainable parameters.

It’s also important to recognize that more data doesn’t automatically produce better results. A focus on enhancing the quality of data will go a long way not only in improving accuracy, but also in reducing human biases in deep-learning models.

We’ve already embraced AI, so let’s focus on making it better

When 2021 ends, The Matrix fans will get to watch its fourth instalment. I wonder what modern-day message or warning the scriptwriters will embed into the story. But for us living in the real world (yes, let’s say our reality is the reality 😉 ), we have bigger, immediate concerns for what 2021 will bring.

We’ve already seen the potential of deep tech to do good, especially with the rapid development of Covid-19 vaccines and the advancements in telehealth prompted by the pandemic. As we embrace the promises of deep tech like AI, let’s keep an eye open to the possible pitfalls too, and support those who are trying to solve or prevent them.